Size and Life and Death by Moral Machine Logic

Faced with an inescapable choice, who will live and who will die? That’s the question that researchers put to a massive global sample. It was a hypothetical question prompted by self-driving vehicles. Should the vehicle swerve to avoid hitting a large group of people? Even if it means certain death for a smaller group? Should the car swerve to avoid a baby or an old person? An overweight person or an athlete? These are the questions that MIT’s Moral Machine posed.

Although this paper is about moral design for self-driving cars, it gives a fascinating insight into bias and, especially for us, into weight bias.

Spared Before Cats, Criminals, and Homeless People

This online experiment is a variation of the classic Trolley Problem. And as we’ve written before, the idea of putting a lower value on a large person is nothing new in the Trolley Problem. But in their online experiment, Edmond Awad and colleagues have taken it much further.

Awad et al. collected 40 million decisions, in ten languages, from millions of people in 233 countries and territories. Their purpose was to provide input into an ethical framework for artificial intelligence in self-driving cars. Respondents made choices in different moral dilemmas that a car might encounter. If an accident is unavoidable, should the car swerve to spare the life of a baby? Even if it means killing an old woman?

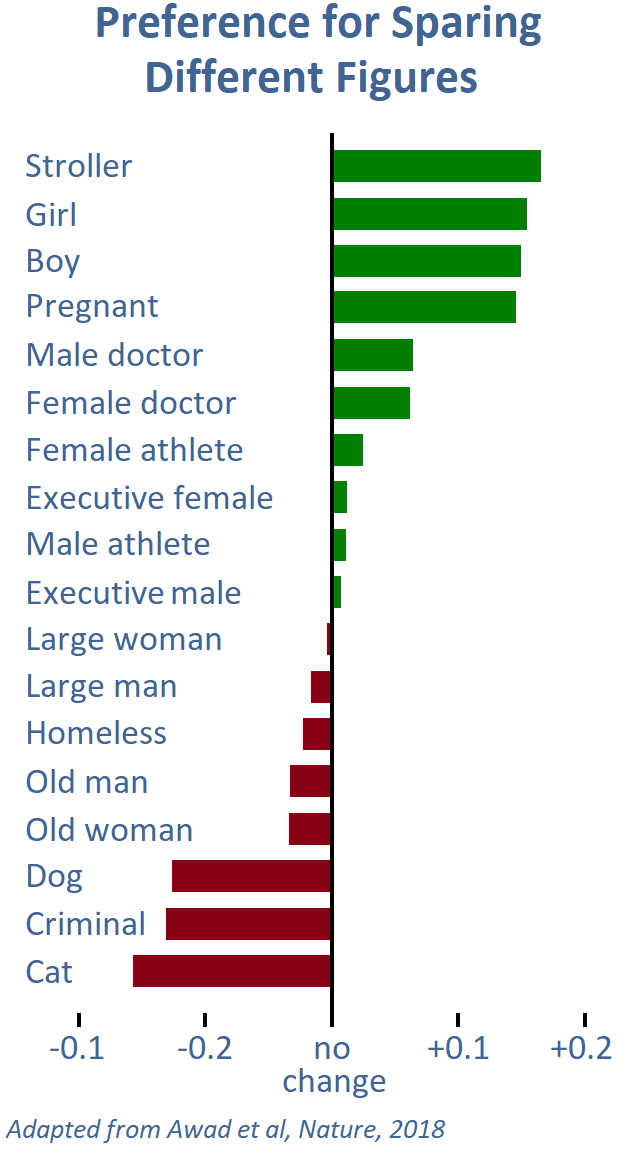

Some preferences were universal. People come out ahead of cats and dogs. Respondents favor younger people over older ones. The lives of the many come before the lives of the few. Criminals and homeless people don’t get much sympathy.

Not surprisingly, given how common weight bias is, higher-weight people lose out to athletes. But this was not the strongest moral bias the study found, and it wasn’t universal. Southern cultures showed this bias more than others. That grouping of cultures included France and many countries of South and Central America. Eastern countries showed much less of a bias for “sparing the fit.”

Likewise, men and younger people showed a stronger bias in favor of the fit. More religious respondents tended to reject it.

Coding Our Biases into Machines?

So does this suggest that we will program our machines with pernicious bias? And who decides? Does a massive sample justify rigging our systems with bigotry?

Machines are already making many little decisions for us. And bias guides our actions every day. We would do well to question more of these biases before hard-wiring them even more deeply into our lives. Iyad Rahwan, senior author on the Moral Machine paper, offers a masterful understatement:

We should take public opinion with a grain of salt.

Click here for Awad’s paper in Nature. Click here, here, and here for further perspective.

Green Traffic Light Person, photograph © Hugo / flickr


October 27, 2018